Artificial intelligence and machine learning are currently among the hottest buzzwords in the tech world, and with good reason. With tech giants such as Netflix, Amazon, and Google adopting them holistically, these technologies are woven into our lives as customers far more than we might imagine.

In the last few years, we've seen numerous technologies once confined to science fiction become reality. Experts see artificial intelligence as a production factor that can introduce new sources of growth and transform the way work is done across industries.

A report by Accenture says that AI technologies could increase labor productivity by 40% or more by 2035. This may double economic growth in 12 developed nations that continue to draw talented and experienced professionals to work in this field.

With advances in computing power, the migration to cloud computing, and falling storage costs, these technologies have expanded their presence beyond the major tech companies. While ML has been around for decades, it has only recently been recognized as a tool for transforming businesses. ML and AI can help companies achieve more by increasing productivity and efficiency.

According to Gartner's 2019 CIO Agenda survey, the percentage of organizations adopting AI jumped from 4% to 14% between 2018 and 2019. Given the benefits AI and machine learning (ML) bring to risk assessment, business analysis, and R&D, along with the resulting cost savings, AI implementation will continue to rise in 2020.

Let's Take A Deep Dive Into The Concepts Of Artificial Intelligence And Machine Learning.

What Is Artificial Intelligence?

AI is a branch of computer science that seeks to simulate or replicate human intelligence in machines so that they can perform tasks that generally require human intelligence. Programmable functions of AI systems include planning, learning, reasoning, problem-solving, and decision-making. AI systems are powered by algorithms that use techniques such as deep learning and machine learning. ML algorithms use statistical methods to let AI systems learn from data.

Through ML, AI systems get progressively better at tasks without being explicitly programmed to do so. AI technologies have transformed business capabilities globally, allowing people to automate previously time-consuming tasks and gain untapped insights from their data through rapid pattern recognition.

Three Types Of Artificial Intelligence (AI)

Narrow AI

It is the only type of AI we have successfully realized to date. Narrow AI is goal-oriented and designed to perform a single task, e.g. speech recognition and voice assistants, facial recognition, searching the internet, or driving a car, and it is very good at completing the specific task it is programmed to do. While these machines may seem clever, they operate under a narrow set of constraints and limitations, which is why this type is commonly known as weak AI. Narrow AI doesn't mimic or replicate human intelligence; it merely simulates human behavior based on a narrow range of parameters and contexts.

Artificial General Intelligence (AGI)

Also referred to as strong AI or deep AI, AGI is the concept of a machine with general intelligence that mimics human intelligence and behavior, with the ability to learn and apply its intelligence to solve any problem. AGI would be able to think, understand, and act in a way indistinguishable from a human in any given situation. AI scientists and researchers have not yet come close to strong AI. To succeed, they would need to find a way to make machines conscious, programming a complete set of cognitive abilities. Machines would have to take learning to the next level, not only improving efficiency on single tasks but gaining the ability to apply experiential knowledge to a much wider range of problems. Strong AI would use a theory-of-mind AI framework, which refers to the ability to discern the emotions, needs, beliefs, and thought processes of other intelligent entities. Theory-of-mind-level AI is not about simulation or replication; it's about training machines to truly understand humans.

Artificial Super Intelligence

ASI is hypothetical AI that doesn't just mimic or understand human intelligence and behavior; it is where machines become self-aware and surpass the capacity of human intelligence and ability. The concept of ASI sees AI evolve to be so akin to human experiences and emotions that it does not just understand them; it has emotions, beliefs, needs, and desires of its own. In addition to replicating multi-faceted human intelligence, ASI would theoretically be far better at everything: science, math, sports, art, emotional relationships, medicine, hobbies, and more. ASI would have greater memory and a faster ability to process and analyze data and stimuli. Consequently, the decision-making and problem-solving capabilities of super-intelligent beings would far exceed those of human beings.

What Is Machine Learning?

Machine Learning is a method of data analysis that automates analytical model building. It is a field that uses algorithms to learn from data and make predictions. As an application of AI, it gives systems the ability to learn and improve from experience automatically, without being explicitly programmed. Machine Learning focuses on developing computer programs that can access data and use it to learn.

In practice, this means we can feed data into an algorithm and use it to make predictions about what might happen in the future. ML is a branch of AI based on the idea that systems can learn from data, identify patterns, and make decisions with minimal human intervention. Now that we know about the technology, let's go through its techniques.

Techniques Of Machine Learning

#1 Supervised Learning

Supervised learning is the most widely used paradigm for machine learning. It is effective in numerous business scenarios, such as sales forecasting, fraud detection, and inventory optimization. Supervised learning works by feeding known historical input and output data into machine learning algorithms. At each step, after processing an input-output pair, the algorithm adjusts the model so that its output moves closer to the expected result.
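To make that step-by-step adjustment concrete, here is a minimal sketch of stochastic gradient descent on a one-feature linear model, the kind of model that might sit behind a very simple sales forecast. It assumes a squared-error loss and a fixed learning rate; the function name `fit_sgd` and the ad-spend/units-sold data are hypothetical and only for illustration.

```python
import random


def fit_sgd(pairs, lr=0.01, epochs=1000, seed=0):
    """Fit y ~ w * x + b with stochastic gradient descent on squared error.

    pairs: list of (x, y) tuples, i.e. known historical inputs and outputs.
    """
    rng = random.Random(seed)
    data = list(pairs)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(data)  # visit the examples in a different order each epoch
        for x, y in data:
            error = (w * x + b) - y  # how far the output is from the expected result
            # Step against the gradient of 0.5 * error**2 so the output moves toward y.
            w -= lr * error * x
            b -= lr * error
    return w, b


# Hypothetical history: ad spend (x) vs. units sold (y), generated from y = 3x + 2.
history = [(x, 3 * x + 2) for x in range(10)]
w, b = fit_sgd(history)
print(f"w = {w:.2f}, b = {b:.2f}")
forecast = w * 12 + b  # predict units sold for a new ad spend of 12
```

Because the toy data is generated from y = 3x + 2 with no noise, the learned values end up very close to w = 3 and b = 2. Real data is noisy, so practical models typically add validation data and a stopping rule.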

Supervised learning can be utilized to make predictions, classify or recognize data.

For instance, a model could be fed data from thousands of bank transactions, each labeled as fraudulent or not. The model recognizes the patterns that led to a "fraudulent" or "not fraudulent" label and, over time, learns to make more accurate predictions.
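The fraud example above can be sketched as a logistic regression classifier trained by gradient descent on labeled transactions. Logistic regression is an illustrative choice here, not the only model used for fraud detection; the two features (amount in thousands and a foreign-transaction flag), the labels, and the helper names `train_logistic` and `is_fraud` are hypothetical.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(X, y, lr=0.3, epochs=3000):
    """Batch gradient descent on log-loss. X: feature lists, y: 0/1 labels (1 = fraud)."""
    n, d = len(X), len(X[0])
    weights, bias = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for features, label in zip(X, y):
            p = sigmoid(sum(w * f for w, f in zip(weights, features)) + bias)
            err = p - label  # predicted probability minus the known label
            for j, f in enumerate(features):
                grad_w[j] += err * f
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def is_fraud(weights, bias, features, threshold=0.5):
    p = sigmoid(sum(w * f for w, f in zip(weights, features)) + bias)
    return p >= threshold


# Hypothetical labeled transactions: [amount in thousands, is_foreign (0/1)].
X = [[0.1, 0], [0.3, 0], [0.2, 1], [0.5, 0], [4.0, 1], [5.0, 1], [3.5, 1], [6.0, 0]]
y = [0, 0, 0, 0, 1, 1, 1, 1]
weights, bias = train_logistic(X, y)
print(is_fraud(weights, bias, [5.5, 1]), is_fraud(weights, bias, [0.15, 0]))
```

Each pass nudges the weights so that predicted probabilities move toward the known labels, which is the "learns to make more accurate predictions" behavior described above, in miniature.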

Input and output data can be obtained from historical data, simulations, or human data labeling. In cases involving unstructured data, such as images, video, audio, or text, specific categories or properties can serve as the output data.

Example use cases for supervised learning include sales forecasting, fraud detection, and inventory optimization.

#2 Unsupervised Learning

Unsupervised learning is used to develop predictive models from input data that has no historically labeled responses. For instance, a set of unlabeled photos or a list of customers could serve as input data in an unsupervised learning use case. The most common applications of unsupervised learning are clustering and association problems. Clustering produces a model that groups objects based on specific properties, such as color. Organizations can then take those clusters and identify the rules that exist between them.
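Clustering can be illustrated with k-means, one common algorithm for it (the article does not name a specific one). The sketch below groups a hypothetical customer list by two properties without any labels. The deterministic initialization, the function name `kmeans`, and the data are assumptions made for readability.

```python
import math


def kmeans(points, k, max_iter=100):
    """Plain k-means (Lloyd's algorithm) on a list of numeric tuples."""
    # Simplifying assumption: seed the centroids with the first k points.
    # Library implementations usually use random or k-means++ initialization.
    centroids = [list(p) for p in points[:k]]
    labels = None
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        if new_labels == labels:
            break  # assignments stopped changing, so the clustering has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(coord) / len(members) for coord in zip(*members)]
    return labels, centroids


# Hypothetical customers: (annual spend in thousands, store visits per month).
customers = [(1.0, 2.0), (8.0, 9.0), (1.5, 1.8), (8.5, 8.0), (1.2, 2.5), (9.0, 9.5)]
labels, centroids = kmeans(customers, k=2)
print(labels)  # prints [0, 1, 0, 1, 0, 1]
```

No labels are supplied; the two groups (low-spend, infrequent visitors and high-spend, frequent visitors) emerge from the data alone, which an organization could then examine for rules or patterns.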

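Examples of use cases for unsupervised learning include grouping unlabeled photos by visual properties and segmenting a customer list into groups with similar characteristics.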

Unsupervised learning can also be used to prepare data for subsequent supervised learning. This is done by identifying features or patterns that can be used to categorize, compress, and reduce the dimensionality of data.
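One widely used way to reduce dimensionality is principal component analysis (PCA); the article does not specify a technique, so PCA is swapped in here as an example. This minimal sketch finds the top principal axis with power iteration and compresses each row to a single feature that a later supervised model could consume. The function names and the two-feature data are hypothetical.

```python
import math


def first_principal_component(rows, iterations=100):
    """Return the column means and the top principal axis via power iteration."""
    n, d = len(rows), len(rows[0])
    means = [sum(col) / n for col in zip(*rows)]
    centered = [[x - m for x, m in zip(row, means)] for row in rows]
    # Sample covariance matrix (d x d); needs at least two rows.
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)] for i in range(d)]
    v = [1.0] * d  # assumes the start vector is not orthogonal to the top eigenvector
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return means, v


def project(rows, means, axis):
    """Compress each row to a single number: its coordinate along the axis."""
    return [sum((x - m) * a for x, m, a in zip(row, means, axis)) for row in rows]


# Hypothetical data: two perfectly correlated features (the second is twice the first).
rows = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
means, axis = first_principal_component(rows)
compressed = project(rows, means, axis)
print(axis, compressed)
```

Because the second feature carries no information beyond the first, one projected value per row preserves all of the variation, halving the number of inputs passed to the next model.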

How Organizations Are Adopting AI And ML Technologies

Here are a few significant areas a company needs to consider when thinking about adopting AI/ML.

First, the company needs to undergo a cultural change in which all groups and departments understand the significance of data and how it can help them. The organization needs to develop a data strategy to make sure data is managed as an asset. This requires identifying, governing, and standardizing data sources so employees understand what data they have and what the "source of truth" is for their business. Knowing what they have will help them determine what they need to meet their business objectives. Employees should improve their data literacy, and some will need to upgrade their current skill set to work directly on ML technology.

Second, organizations need to focus on the areas where AI/ML can add the most value. Because nearly every facet of the business is a candidate for change, it can be challenging to identify a suitable use case. The most common use cases revolve around prediction, classification, and decision-making with a higher level of certainty. With machine learning, organizations can achieve these objectives faster and more accurately. This requires clean, accurate data, which points squarely to the need for a thorough data strategy. Such a strategy not only helps organizations make informed decisions faster but also helps them spot opportunities quickly so they can act on them for better results.

Finally, it's essential to watch out for organizational fatigue in such a big undertaking. Companies often stall somewhere between identifying the right use case, building an ML/AI model, promoting it to production, and scaling beyond the pilot. The entire process can be time-consuming in some cases. One way to avoid being overwhelmed and break out of stagnation is to keep projects small and focused and keep iterating.

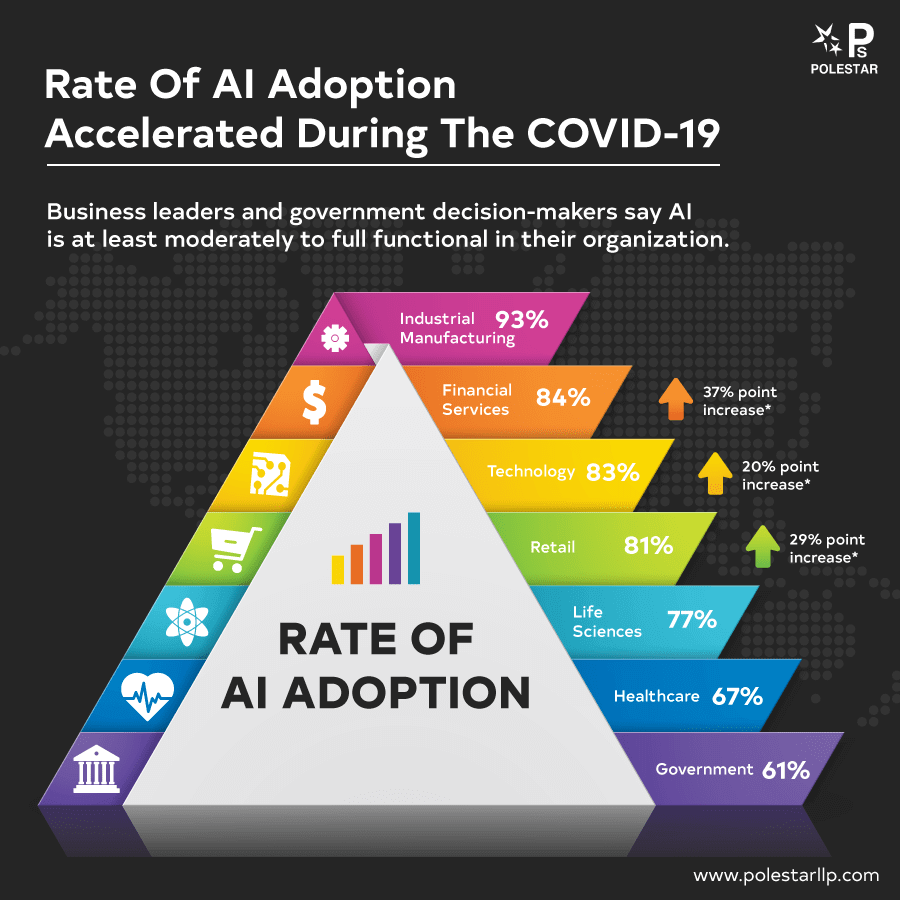

The chart above shows how confident business leaders are in AI's ability to solve major industry problems and how AI adoption accelerated during the pandemic.

Let's Look At Key AI And ML Technology Trends For 2021

In 2021, AI and ML technologies will continue to become more mainstream. Organizations that haven't traditionally viewed themselves as candidates for AI applications will start to embrace these technologies as they become easier and more affordable to implement. Here is a brief overview of each key trend:

Fraud/Anomaly Detection and automating inputs

Machine Learning-based applications have evolved from email spam and malware filtering to predicting and recommending actions. Companies will increasingly benefit from real-time ML and deep learning financial applications, such as fraud and anomaly detection, self-auditing systems, and suggestions based on past orders and transactions. Consolidating these operations will help prevent leakage and mistakes.
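As a rough illustration of anomaly detection, the simplest baseline flags a transaction whose amount sits far from a customer's usual spending, measured in standard deviations (a z-score). Production fraud systems generally use richer ML models and many more signals; this sketch, the function name `flag_anomalies`, the 3.0 threshold, and the transaction amounts are all assumptions for illustration.

```python
import statistics


def flag_anomalies(history, new_amounts, z_threshold=3.0):
    """Return the new amounts whose z-score against the history exceeds the threshold."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # sample standard deviation; needs >= 2 values
    return [amt for amt in new_amounts if abs(amt - mean) / stdev > z_threshold]


# Hypothetical recent card transactions for one customer, in dollars.
history = [42.0, 38.5, 45.0, 40.0, 41.5, 39.0, 43.0, 44.5]
print(flag_anomalies(history, [41.0, 250.0, 39.5]))  # prints [250.0]
```

The threshold trades off missed fraud against false alarms: lowering it catches more unusual transactions but also flags more legitimate ones.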

Improving employee (Millennial) experience

While chatbots became mainstream for customer engagement in 2019, the focus is now shifting to employee experience in the race to attract and retain millennial talent. Millennials value work-life balance, quick integration into the work culture, and fewer 'boring' corporate functions. AI/ML and Natural Language Processing chatbots can help win over millennials while improving workplace productivity.

Facial recognition to improve attendance and workplace access

Facial recognition is seeing notable workplace adoption, especially for customer-centric and cybersecurity functions. Enterprises that adopt this capability, especially those with large workforces, will reduce, if not eliminate, the tedium of tracking employee attendance. It will also solve numerous issues related to passwords and access cards, saving further time and costs.

Image recognition-based identification

Deep learning and machine vision are also being used for defect detection and quality inspection. For instance, in the aviation and textile industries, AI-powered image recognition allows companies to detect common defects. With a 90% defect detection rate, such an application can automate the quality inspection process and provide real-time data for timely decisions.
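When quoting a figure like the 90% above, it helps to be clear which metric it refers to, because the detection rate (also called recall) and precision measure different things: recall is the share of real defects the system catches, while precision is the share of flagged items that are truly defective. The counts below are hypothetical and only show how the two can differ for the same inspection run.

```python
def precision_and_recall(tp, fp, fn):
    """Precision = TP / (TP + FP); recall (detection rate) = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical inspection batch: 100 parts are truly defective. The system flags 95 parts,
# 90 of which are truly defective (5 false alarms), and misses the other 10 defects.
precision, recall = precision_and_recall(tp=90, fp=5, fn=10)
print(f"precision = {precision:.3f}, detection rate = {recall:.3f}")
```

Here the detection rate is 90% while precision is about 94.7%; a system could score well on one and poorly on the other, so both are worth checking before automating an inspection step.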

Conclusion

The growth of intelligent systems, including those at the edge, is therefore likely to be fast and far-reaching. As enterprises become software-driven to meet the needs of their systems and customers, intelligent systems will become the expected norm.

Polestar Solutions has well-established expertise in artificial intelligence and machine learning and can help you find new digital solutions to advance your digital transformation efforts. Get in touch.