If your business operations carry a high “Cost of Delay”, you already know how important real-time data streaming, and its analysis for business insights, can be. In an environment where “Agility” matters as much as “Innovation”, real-time stream analytics is seeing widespread and proven adoption.

What is Streaming Analytics?

Streaming analytics is the processing and analysis of large volumes of “in-motion” data, i.e. acting on real-time data using continuous queries, unlike batch data processing, which can work with out-of-date information. With streaming analytics, businesses can use data in motion to avoid lost opportunities and create new ones.
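The difference between a batch computation and a continuous query can be sketched in plain Python. This is an illustrative sketch rather than any platform's API: the function names are hypothetical, and the short `events` list stands in for what would really be an unbounded stream.

```python
from __future__ import annotations

from typing import Iterable, Iterator


def batch_average(readings: list[float]) -> float:
    """Batch style: wait until the whole dataset is available, then compute once."""
    return sum(readings) / len(readings)


def continuous_average(stream: Iterable[float]) -> Iterator[float]:
    """Streaming style: a 'continuous query' that emits an updated result
    as each new event arrives, keeping only a running count and total."""
    count = 0
    total = 0.0
    for value in stream:
        count += 1
        total += value
        yield total / count


events = [10.0, 12.0, 11.0, 30.0]
print(batch_average(events))             # 15.75, available only after all data is in
print(list(continuous_average(events)))  # [10.0, 11.0, 11.0, 15.75], one result per event
```

The batch version cannot answer until all data has arrived, while the streaming version holds only a running count and total, so it can keep running on a live feed indefinitely.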

Before we look at the traditional and modern architectures for stream analytics, along with the top 5 platforms or tools for data stream analytics, let us understand the use cases of streaming analytics for real-time big data analysis.

Some Popular Use Cases Of Data Stream Analytics:

- Detecting and predicting disruptive behaviour of any type of machinery or vehicles based on the data transmitted from IoT sensors and devices.

- Real-time recommendations, informed by geographic preferences, for users browsing the mobile or web interface of an e-commerce, video streaming or travel portal.

- Online gaming companies offering realistic and engaging experiences in a virtual world.

- Financial portfolio managers adjusting their holdings based on real-time risk analysis of stock exchange data streams.

- Social media and other user interaction platforms sifting through the quintillions of bytes of data generated each day to protect users from content that could be categorised as fraudulent, bullying, violent or otherwise offensive.

- Continuous monitoring of patient health to ensure timely treatment.

- Retail and hospitality companies responding quickly to customer queries such as new orders, cart additions, returns or complaints.
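The first use case above, spotting disruptive machine behaviour from IoT sensor readings, often starts with a simple sliding-window check. The Python sketch below is illustrative only: it assumes a single numeric sensor stream, and the function name, window size and threshold are hypothetical choices rather than recommendations.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, Iterable, Iterator, Tuple


def detect_anomalies(
    readings: Iterable[float], window: int = 5, threshold: float = 3.0
) -> Iterator[Tuple[int, float]]:
    """Yield (index, value) for readings that deviate sharply from recent history.

    A reading is flagged when it lies more than `threshold` population standard
    deviations away from the mean of the previous `window` readings.
    """
    recent: Deque[float] = deque(maxlen=window)  # sliding window of past readings
    for i, value in enumerate(readings):
        if len(recent) == window:  # only score once the window is full
            mu = mean(recent)
            sigma = pstdev(recent)
            if abs(value - mu) > threshold * sigma:
                yield i, value
        recent.append(value)  # deque drops the oldest reading automatically


vibration = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 5.0, 1.0]
print(list(detect_anomalies(vibration)))  # [(6, 5.0)]
```

A fixed rule like this is cheap enough to evaluate on every event; real deployments would typically replace it with a trained model.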

Traditional Streaming Data & Analytics Architecture:

The traditional approach patches together multiple tools and typically involves four steps. First, the data must be streamed sequentially from the source without disruption; a stream processor takes care of this.

Second, the data is extracted from these stream processors, then transformed and loaded by an ETL tool.

Third, the data is loaded into streaming data storage, typically a data lake, because it is inexpensive and flexible compared with other options.

Finally, and perhaps most crucially, an analytical system makes sense of this voluminous data. Business users want insights produced by analytical and statistical operations such as sampling, clustering, correlation and more.
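The four hand-offs can be sketched as a chain of Python generators. Each function below is a hypothetical placeholder for what would, in the traditional approach, be a separately deployed and operated tool; the JSON event format and the field names are assumptions for illustration.

```python
import json
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Iterator, List


def stream_processor(raw_events: Iterable[str]) -> Iterator[str]:
    """Step 1: deliver raw events from the source, in order, without gaps."""
    for raw in raw_events:
        yield raw


def etl(raw_stream: Iterable[str]) -> Iterator[dict]:
    """Step 2: extract (parse JSON), transform (validate, convert units), load (hand off)."""
    for raw in raw_stream:
        record = json.loads(raw)
        if "device" not in record or "temp_f" not in record:
            continue  # drop malformed events
        yield {
            "device": record["device"],
            "temp_c": round((record["temp_f"] - 32) * 5 / 9, 2),
        }


def load_to_lake(records: Iterable[dict]) -> Dict[str, List[dict]]:
    """Step 3: persist records in cheap storage, grouped by device (data lake stand-in)."""
    lake: Dict[str, List[dict]] = defaultdict(list)
    for rec in records:
        lake[rec["device"]].append(rec)
    return lake


def analyse(lake: Dict[str, List[dict]]) -> Dict[str, float]:
    """Step 4: compute a simple per-device aggregate for business users."""
    return {device: mean(r["temp_c"] for r in recs) for device, recs in lake.items()}


raw = [
    '{"device": "pump-1", "temp_f": 212}',
    '{"device": "pump-1", "temp_f": 32}',
    '{"oops": true}',
    '{"device": "pump-2", "temp_f": 50}',
]
print(analyse(load_to_lake(etl(stream_processor(raw)))))
# {'pump-1': 50.0, 'pump-2': 10.0}
```

In this sketch the stages are just function calls; in a real traditional stack each one is a separate system to deploy, monitor and scale.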

This approach requires constant server monitoring, keeping track of software updates and installations, and managing scale-up and scale-down requirements. A significant amount of time goes into setting up the basics before the data streams even start arriving.


Modern Architecture And The Leading Platforms:

The modern approach to data stream analytics is more closely aligned with the business values of fast delivery and self-service capabilities, reducing dependence on IT departments for every small or mundane activity.

These platforms offer a one-stop solution to process and store event streams and to convert them into analytics-ready data and, subsequently, business insights. These powerful in-memory engines are available as PaaS offerings, so you do not have to worry about provisioning hardware or managing servers.

In terms of security, these platforms score well: most of the processing is done in memory, which limits how much data is persisted, and the data is encrypted. On the flexibility front, they let users extend their environment with open-source and third-party tools.

In terms of performance, the leading streaming data platforms scale out to process millions of events per second with very low latency.

Here Are Our Top 5 Picks For Stream Analytics Platforms:

Azure Stream Analytics:

This offering from Microsoft, a leading enterprise software provider, is one of the most capable streaming analytics platforms. Azure Stream Analytics combines SQL, C# and JavaScript with built-in machine learning capabilities, enabling companies to analyse data from multiple streams in parallel and efficiently.

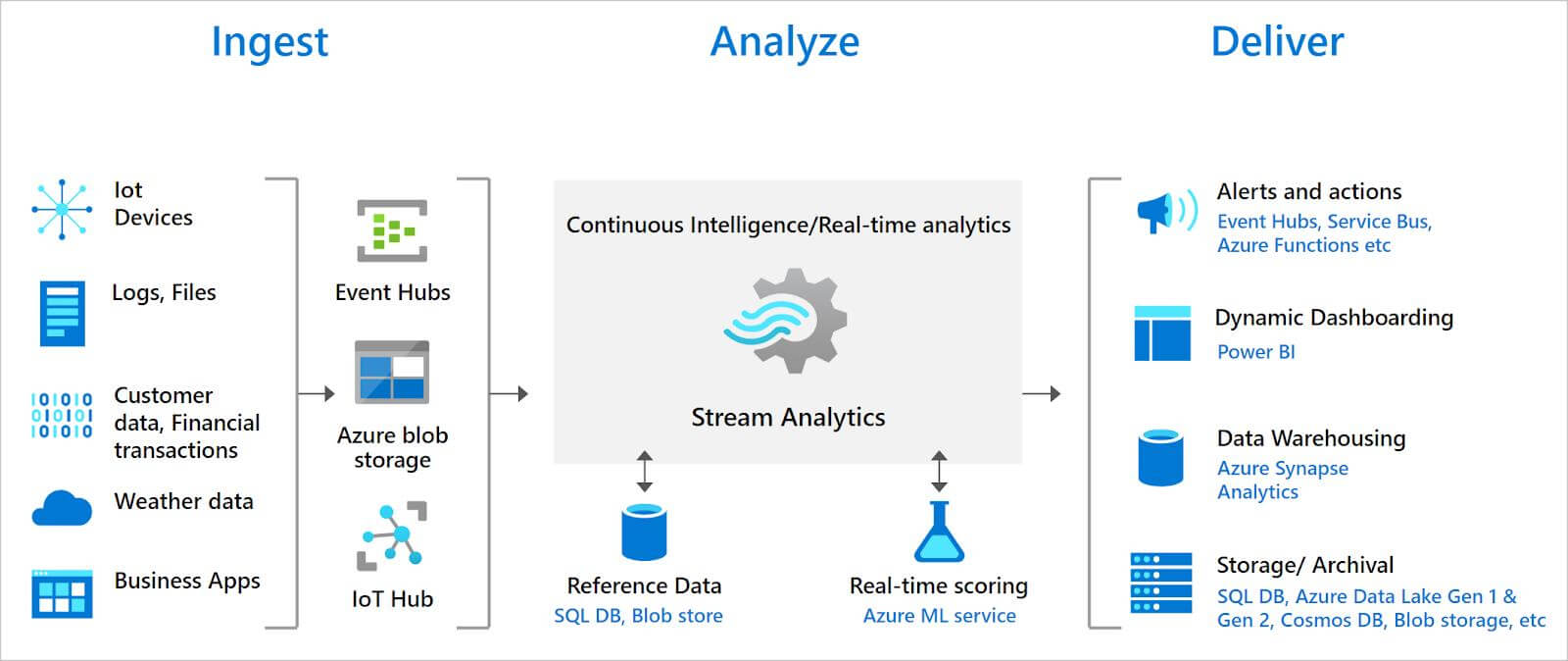

Here is the architecture behind Azure Stream Analytics:

Source: Microsoft Docs

Simply put, it comprises: 1) input from Azure Event Hubs, Azure IoT Hub or Azure Blob Storage; 2) a SQL query used to filter, join, sort and aggregate the incoming data streams over a period of time; and 3) one or more outputs produced from the processed data, which can serve a variety of applications.
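Aggregating “over a period” is usually done with windowing. The Azure Stream Analytics SQL dialect provides windowing functions such as `TumblingWindow`; the query in the comment below is an illustrative example with hypothetical input and field names. The Python only emulates tumbling-window semantics locally so the behaviour is visible; it is not the Azure Stream Analytics API.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Illustrative only: an ASA query of roughly this shape (input and field
# names are hypothetical) groups events into fixed 10-second windows:
#   SELECT DeviceId, AVG(Temperature) AS AvgTemp
#   FROM Input TIMESTAMP BY EventTime
#   GROUP BY DeviceId, TumblingWindow(second, 10)

Event = Tuple[float, str, float]  # (event_time_seconds, device_id, temperature)


def tumbling_window_avg(
    events: Iterable[Event], window_seconds: int = 10
) -> Dict[Tuple[int, str], float]:
    """Average temperature per device per fixed, non-overlapping window.

    Keys are (window_end, device_id). This sketch uses half-open
    [start, end) windows; ASA's own boundary convention may differ.
    """
    buckets: Dict[Tuple[int, str], List[float]] = defaultdict(list)
    for ts, device, temp in events:
        window_end = (int(ts // window_seconds) + 1) * window_seconds
        buckets[(window_end, device)].append(temp)
    return {key: sum(vals) / len(vals) for key, vals in sorted(buckets.items())}


events = [
    (1.0, "a", 20.0),
    (4.0, "a", 22.0),
    (9.9, "b", 30.0),
    (10.0, "a", 40.0),
    (15.0, "b", 34.0),
]
print(tumbling_window_avg(events))
# {(10, 'a'): 21.0, (10, 'b'): 30.0, (20, 'a'): 40.0, (20, 'b'): 34.0}
```

Because tumbling windows never overlap, each event contributes to exactly one result, which keeps the per-window state small.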

Amazon Kinesis

Amazon Kinesis fits naturally with AWS, the leading cloud platform, and makes streaming big data on AWS seamless. Its main strength is its support for open-source Java libraries and a SQL editor.

Kinesis is flexible enough for enterprises to start with basic insights and reports and later, as their expertise grows, scale up to machine learning models that identify patterns and automate insights.

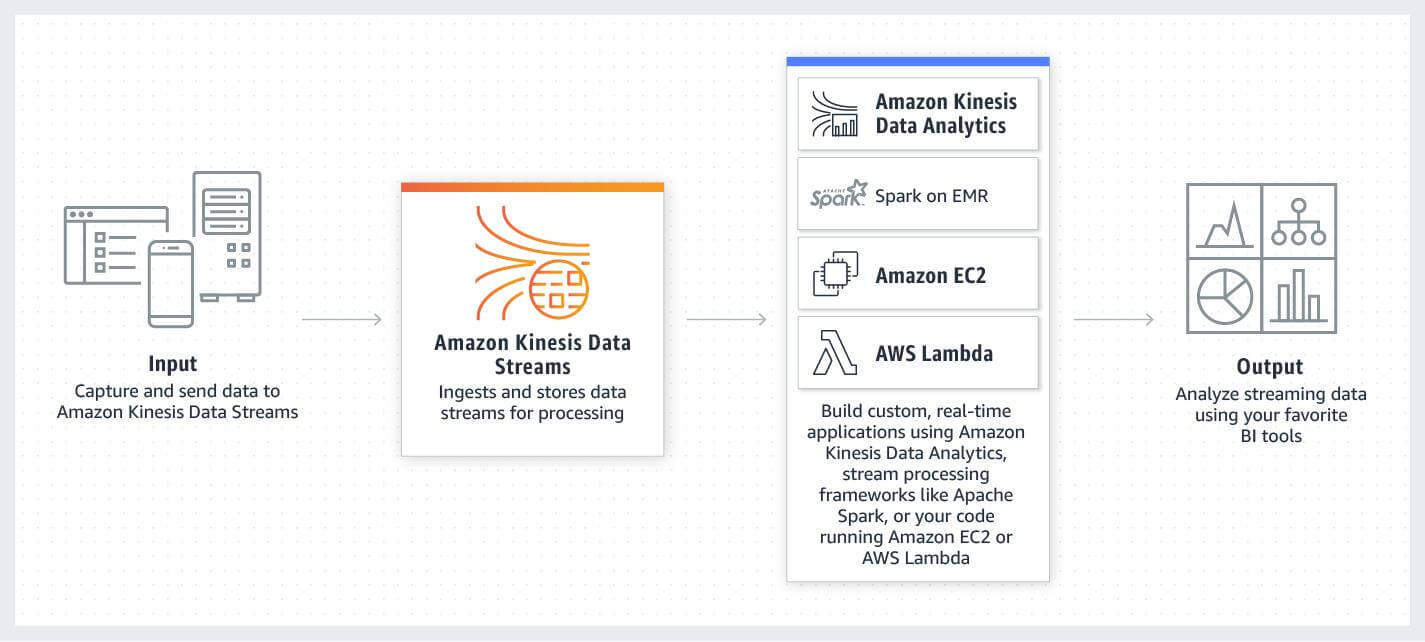

Here is a typical data stream analytics architecture with Amazon Kinesis.

Source: AWS

Google Cloud Dataflow

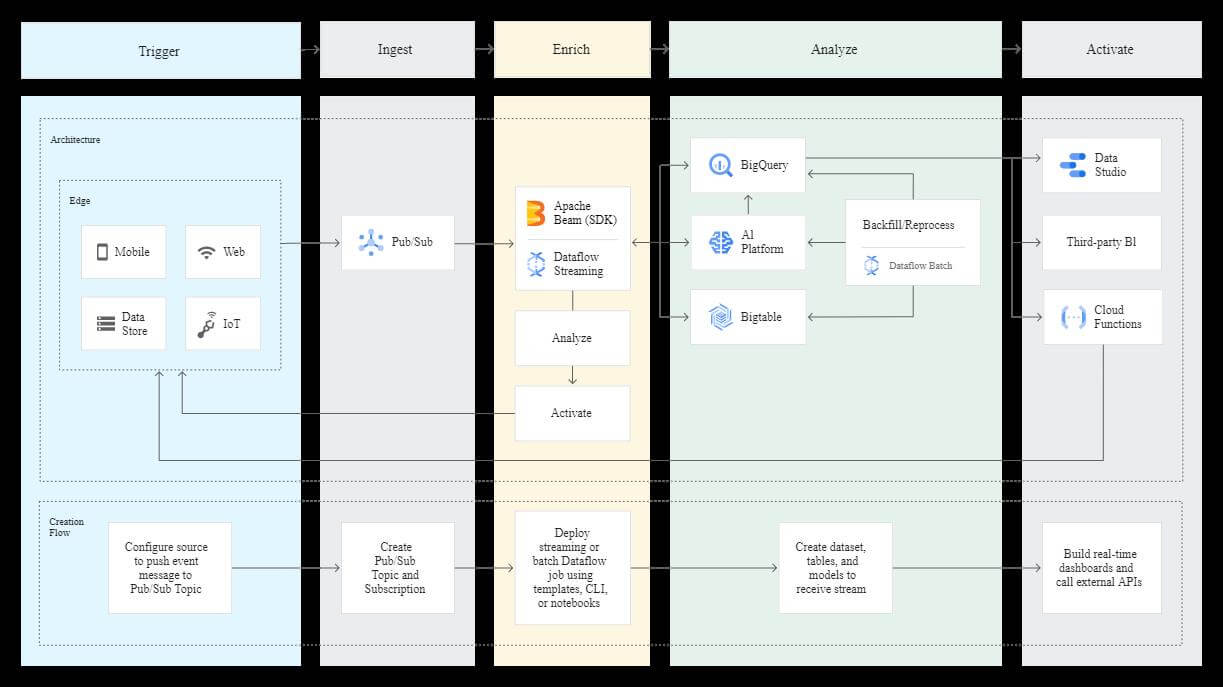

Dataflow is a recent addition to Google’s stream analytics offerings. It supports Python 3 and the open-source Apache Beam SDK, giving it equal capability to process streaming and batch data. The combination of 1) Apache Beam and Python 3 to define data pipelines and extract data from various edge sources, 2) Pub/Sub-based ingestion and 3) Google BigQuery to extract meaningful insights in real time makes it one of the best stream analytics tools. This modular setup removes accessibility complexities and makes the data available to both data engineers and analysts.
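Apache Beam’s model describes a pipeline as transforms applied to collections (PCollections) that may be bounded, as in batch, or unbounded, as in streaming. The plain-Python sketch below mimics only that idea, one piece of logic applied to both kinds of input; it is not Beam code, and the event format and names are hypothetical.

```python
import itertools
from typing import Iterable, Iterator


def purchases(lines: Iterable[str]) -> Iterator[dict]:
    """Pipeline logic, written once: parse 'user,action' lines and keep purchases."""
    for line in lines:
        user, action = line.split(",")
        if action == "purchase":
            yield {"user": user, "action": action}


# Batch: a bounded input, such as yesterday's export file.
batch_input = ["u1,view", "u2,purchase", "u3,purchase"]
batch_result = list(purchases(batch_input))


def live_events() -> Iterator[str]:
    """Streaming: an unbounded input, simulated by an endless generator."""
    for n in itertools.count():
        yield f"u{n},{'purchase' if n % 3 == 0 else 'view'}"


# The same logic consumes the unbounded stream lazily; take the first two results.
stream_result = list(itertools.islice(purchases(live_events()), 2))

print(batch_result)   # [{'user': 'u2', 'action': 'purchase'}, {'user': 'u3', 'action': 'purchase'}]
print(stream_result)  # [{'user': 'u0', 'action': 'purchase'}, {'user': 'u3', 'action': 'purchase'}]
```

Keeping the business logic independent of the source is what lets a single definition serve both the nightly batch and the live feed.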

Here is a typical data stream analytics architecture with Google Cloud Dataflow.

Source: Google Cloud

Apache Kafka

Apache Kafka can be deployed on-premises as well as in the cloud. It is a distributed system of servers and clients that communicate over a TCP network protocol. It is consistently mentioned among the most powerful tools for stream analytics because it can feed data to other analytics or BI platforms. It is used primarily in the backend to integrate microservices and underpins many major real-time streaming data platforms.

It runs as a cluster of one or more servers that can span multiple datacenters. The cluster stores streams of records in categories called ‘topics’, and each record consists of a key, a value and a timestamp.

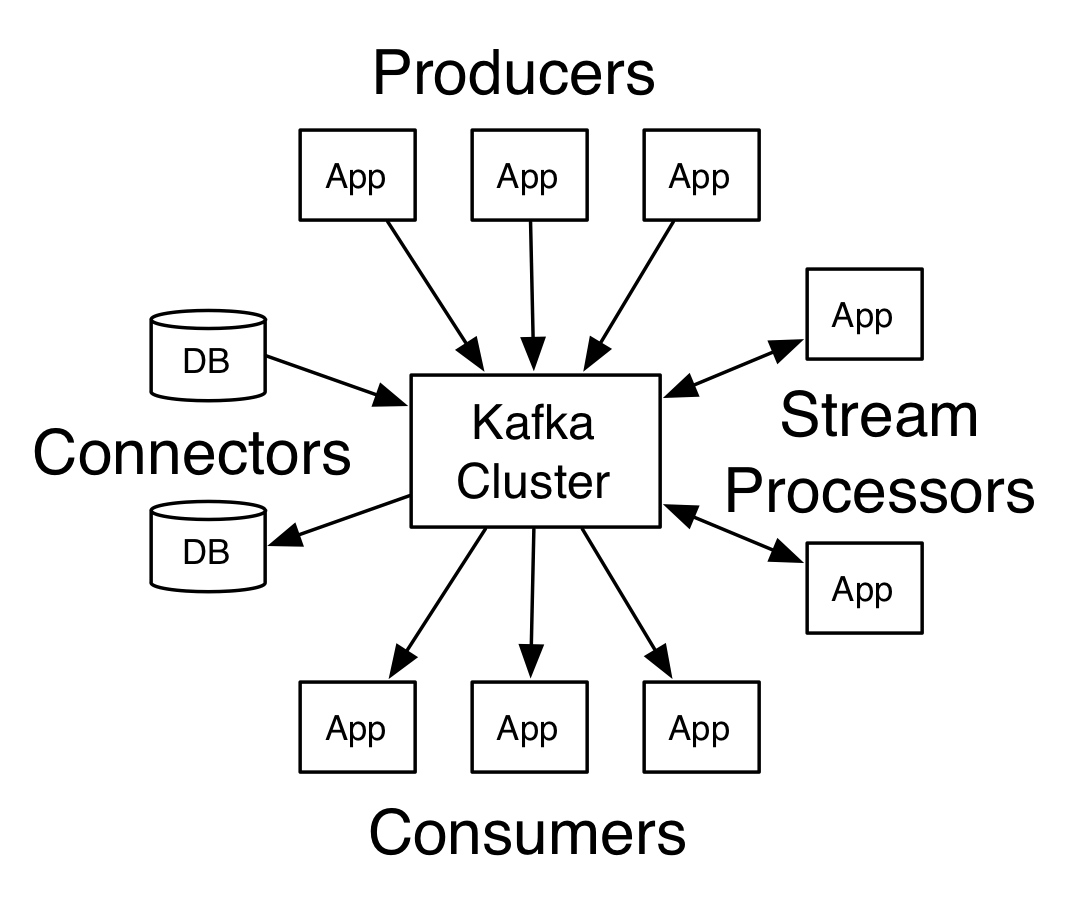

There are four core APIs; this image highlights each:

Source: Apache Kafka

Producer API is used to publish a stream of records to one or more Kafka ‘topics’.

Consumer API allows applications to subscribe to one or more ‘topics’ and process the stream of records delivered to them.

Streams API allows applications to act as stream processors, converting input streams from topics into output topics or results.

Connector API builds and runs reusable producers or consumers that connect ‘topics’ to existing applications and data systems.
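To show how the four roles fit together, here is a toy, in-memory Python model. It is not the Kafka client API: every class and function name is hypothetical, and real Kafka adds partitions, replication, consumer groups and networking that the sketch leaves out. Topics are modelled as append-only logs of key/value/timestamp records, and each consumer tracks its own read offsets.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Record:
    """A single event: key, value and timestamp."""
    key: Optional[str]
    value: str
    timestamp: float


@dataclass
class Cluster:
    """Toy stand-in for a Kafka cluster: each topic is an append-only log."""
    topics: Dict[str, List[Record]] = field(default_factory=dict)

    def append(self, topic: str, record: Record) -> int:
        log = self.topics.setdefault(topic, [])
        log.append(record)
        return len(log) - 1  # offset of the newly appended record


class Producer:
    """Plays the role of the Producer API: publishes records to topics."""

    def __init__(self, cluster: Cluster) -> None:
        self.cluster = cluster

    def send(self, topic: str, key: Optional[str], value: str) -> int:
        return self.cluster.append(topic, Record(key, value, time.time()))


class Consumer:
    """Plays the role of the Consumer API: reads new records from subscribed topics."""

    def __init__(self, cluster: Cluster, topics: List[str]) -> None:
        self.cluster = cluster
        self.offsets = {t: 0 for t in topics}  # next offset to read, per topic

    def poll(self) -> List[Record]:
        batch: List[Record] = []
        for topic, offset in self.offsets.items():
            log = self.cluster.topics.get(topic, [])
            batch.extend(log[offset:])
            self.offsets[topic] = len(log)  # remember how far we have read
        return batch


class UppercaseProcessor:
    """Plays the role of the Streams API: input topic in, transformed output topic out."""

    def __init__(self, cluster: Cluster, source: str, sink: str) -> None:
        self.consumer = Consumer(cluster, [source])
        self.producer = Producer(cluster)
        self.sink = sink

    def run_once(self) -> int:
        records = self.consumer.poll()
        for rec in records:
            self.producer.send(self.sink, rec.key, rec.value.upper())
        return len(records)


def source_connector(cluster: Cluster, rows: List[Dict[str, str]], topic: str) -> None:
    """Plays the role of a source connector: copies rows from an existing system into a topic."""
    producer = Producer(cluster)
    for row in rows:
        producer.send(topic, row["id"], row["status"])


cluster = Cluster()
source_connector(cluster, [{"id": "o-1", "status": "created"},
                           {"id": "o-2", "status": "shipped"}], "orders")
processor = UppercaseProcessor(cluster, "orders", "orders-upper")
processor.run_once()
reader = Consumer(cluster, ["orders-upper"])
print([(r.key, r.value) for r in reader.poll()])
# [('o-1', 'CREATED'), ('o-2', 'SHIPPED')]
```

Because the log is append-only and each consumer keeps its own offset, several independent applications can read the same topic at their own pace.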

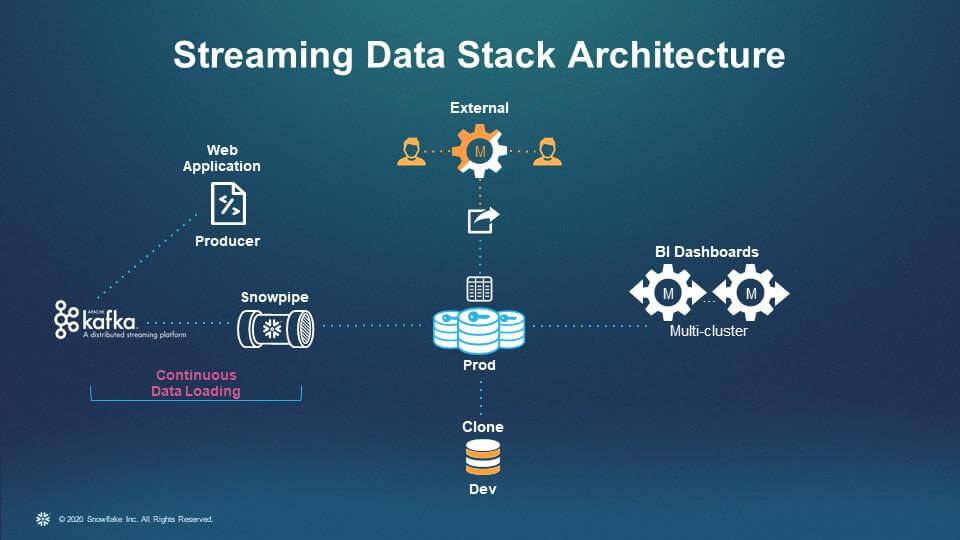

Snowflake Snowpipe:

The last on our list comes from Snowflake, a platform that created a buzz in the market with its record-breaking IPO, and rightly so. The platform offers powerful capabilities along with significant cost benefits.

Snowflake Snowpipe automates loading data from files as they arrive in a staging area, such as Amazon S3, into Snowflake. It runs on separate, Snowflake-managed compute resources, which isolates the loading workload from the customer environment, and you pay only for the compute time you use.
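The idea of loading each newly staged file once, as it arrives, can be modelled in a few lines. In Snowflake itself, a pipe is defined around a `COPY INTO` statement and keeps load metadata so the same file is not loaded twice; the Python below is only a hypothetical model of that behaviour, with made-up file names and an in-memory list standing in for the target table.

```python
from typing import Dict, List, Set


class AutoLoader:
    """Hypothetical model of a Snowpipe-style pipe: each newly staged file
    is copied into the target table exactly once."""

    def __init__(self) -> None:
        self.load_history: Set[str] = set()  # names of files already loaded
        self.table: List[dict] = []          # stand-in for the target table

    def load_new_files(self, stage: Dict[str, List[dict]]) -> List[str]:
        """Load any staged files not seen before; return their names."""
        loaded: List[str] = []
        for name in sorted(stage):
            if name in self.load_history:
                continue  # already loaded, so skip it to avoid duplicate rows
            self.table.extend(stage[name])
            self.load_history.add(name)
            loaded.append(name)
        return loaded


stage = {"batch-001.json": [{"order": 1}]}
pipe = AutoLoader()
print(pipe.load_new_files(stage))  # ['batch-001.json']

stage["batch-002.json"] = [{"order": 2}, {"order": 3}]  # a new file lands in the stage
print(pipe.load_new_files(stage))  # ['batch-002.json']
print(len(pipe.table))             # 3
```

Tracking load history per pipe is what makes repeated triggers safe: re-scanning the stage never duplicates rows that were already loaded.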

Here is a typical data stream analytics architecture with Snowflake Snowpipe.


Conclusion:

There are numerous other platforms, such as IBM Stream Analytics, Apache Storm and Apache Flink, that offer powerful architectures to ingest, transform and analyze incoming data streams in real time. What matters most is the value stream analytics generates for enterprises of all sizes, whether yours is a complex setup spread across geographies or a new-age tech startup that needs to respond to customer queries quickly.

If you need help identifying the best stream analytics tech stack for your organisation, or setting it up, book a free session with our Solution Architects to expedite the process.